Suppose you want to predict the missing word in the sentence "The ( ) jumped over a pond." The ground truth is "frog". The context before the blank, "The", is not enough to predict it. The words after the blank, "jumped over a pond", are far more informative. This is why it is important to learn backward (from future to present) as well as forward (from past to present).

Structure

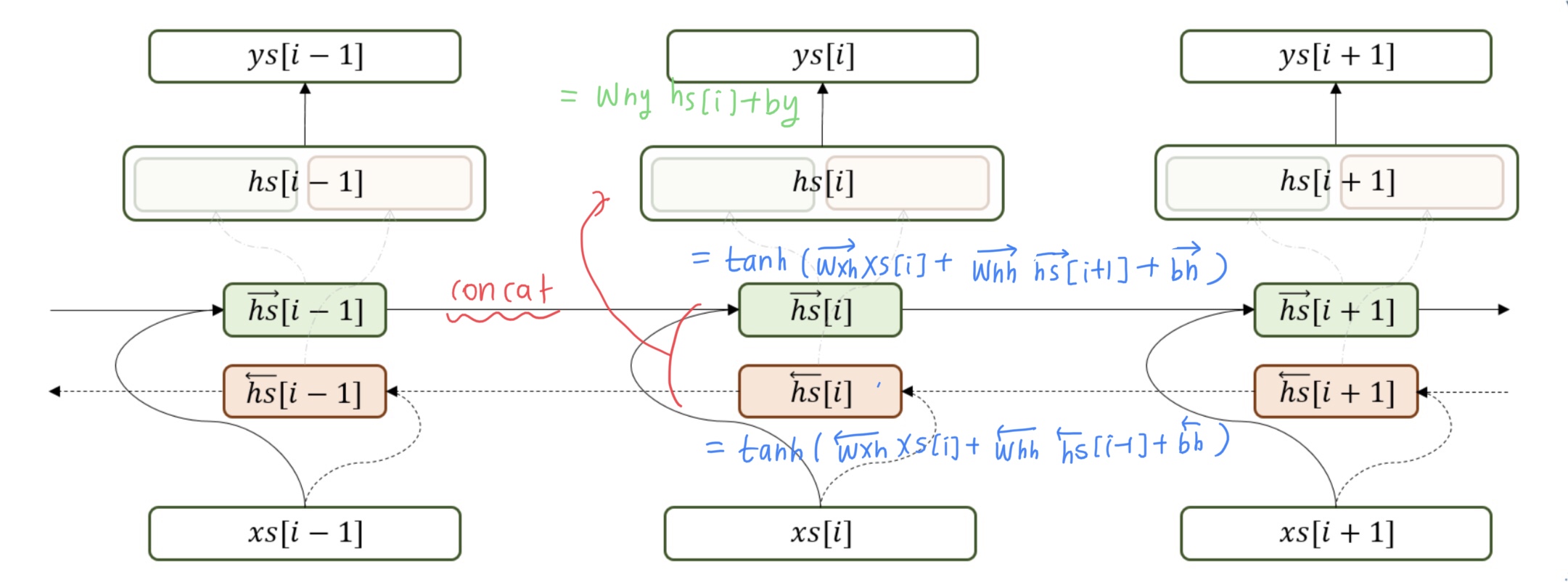

(originally from Woosung Choi’s blog)

The structure is very simple. The only difference from a regular RNN is that the hidden layer at each time step is a concatenation of the forward and backward hidden units. If you replace the hidden units with LSTM cells, it becomes a biLSTM. So simple!
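To make the concatenation concrete, here is a minimal pure-Python sketch. It assumes a vanilla tanh RNN cell with randomly initialized weights. The names `rnn_step`, `run_rnn`, `bidirectional_rnn`, and `init_params`, as well as the sizes, are made up for this illustration and do not come from any library.

```python
import math
import random


def rnn_step(x, h, W_x, W_h, b):
    """One vanilla RNN step: h_new = tanh(W_x x + W_h h + b)."""
    return [
        math.tanh(
            sum(w * xi for w, xi in zip(W_x[j], x))
            + sum(w * hi for w, hi in zip(W_h[j], h))
            + b[j]
        )
        for j in range(len(b))
    ]


def run_rnn(xs, params, hidden_size):
    """Run the RNN over xs in the order given; return one hidden state per step."""
    W_x, W_h, b = params
    h = [0.0] * hidden_size
    states = []
    for x in xs:
        h = rnn_step(x, h, W_x, W_h, b)
        states.append(h)
    return states


def bidirectional_rnn(xs, fwd_params, bwd_params, hidden_size):
    """Concatenate forward and backward hidden states at every time step."""
    fwd = run_rnn(xs, fwd_params, hidden_size)
    # The backward RNN reads the sequence from last to first. Its states are
    # reversed again so that bwd[t] lines up with xs[t].
    bwd = run_rnn(xs[::-1], bwd_params, hidden_size)[::-1]
    # List "+" is concatenation here: each output has 2 * hidden_size values.
    return [f + b for f, b in zip(fwd, bwd)]


def init_params(input_size, hidden_size, rng):
    """Hypothetical helper: small random weights, zero bias."""
    W_x = [[rng.uniform(-0.5, 0.5) for _ in range(input_size)]
           for _ in range(hidden_size)]
    W_h = [[rng.uniform(-0.5, 0.5) for _ in range(hidden_size)]
           for _ in range(hidden_size)]
    b = [0.0] * hidden_size
    return W_x, W_h, b


rng = random.Random(0)
input_size, hidden_size, seq_len = 4, 3, 6

# A toy sequence of 6 random input vectors (stand-ins for word embeddings).
xs = [[rng.uniform(-1, 1) for _ in range(input_size)] for _ in range(seq_len)]

# The two directions use separate weights.
fwd_params = init_params(input_size, hidden_size, rng)
bwd_params = init_params(input_size, hidden_size, rng)

outputs = bidirectional_rnn(xs, fwd_params, bwd_params, hidden_size)
print(len(outputs), len(outputs[0]))  # time steps, 2 * hidden_size
```

At each position, the first half of the output has only seen the inputs up to that position, while the second half has only seen the inputs from that position onward. In the frog example, the backward half at the blank is the part that carries "jumped over a pond".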

Code

(to be updated)